Case Study: The Metaethics of AI

From Classical to Quantum

Nicholas T. Harrington

Project Q – Centre for International Security Studies

University of Sydney

2023

Artificial intelligence (AI), developed and governed by classical, physical computation, is proving to be a disruptive force. Microsoft, in partnership with OpenAI, has placed a one billion USD bet that next-generation AI language models will replace traditional ‘search platforms’ like Google. Their latest model, ChatGPT, which raced to 100 million active users in only two months, has already replaced workers in almost half the firms surveyed in February 2023 (GPT-3.5 is free, while GPT-4 is a subscription service). According to those deploying ChatGPT, the AI model is displacing human labour in tasks such as writing code, copywriting and content creation, customer support, preparing job descriptions, responding to applications, drafting interview requisitions, and summarising meetings and documentation.

Artificial intelligence developed and governed by quantum algorithms and computation would be orders of magnitude more transformative.

According to the ‘Montréal Declaration’ (a public statement on the responsible development of artificial intelligence), this emerging technology may very well “restrict the choices of individuals and groups, lower living standards, disrupt the organization of labor and the job market, influence politics, clash with fundamental rights, exacerbate social and economic inequalities, and affect ecosystems, the climate and the environment.” Recently, Geoffrey Hinton, the so-called ‘Godfather of AI,’ left Google after almost a decade dedicated to deep learning and artificial intelligence for the express purpose of warning the world about the dangers and risks of AI. In particular, Hinton harbours deep concern about its military application and the prospect that we might ‘lose control’ of our creation, leading, ultimately, to our annihilation. It is worthwhile, therefore, to ponder the ethical considerations that are inevitably entangled with the development and deployment of quantum artificial intelligence (QAI).

Given, however, that quantum AI exists only in the future, this inquiry must assume the form of ‘meta-ethical analysis.’ Although the consequences of QAI are largely unknowable, one might conjecture that presumed positive and negative trajectories of classical AI would be amplified in a quantum context. Additionally, the twentieth century provides a number of useful historical analogies — of technologies with disruptive military, economic, and political impact for international, domestic and interpersonal relations. Ethical questions linked to the various transformations that accompanied the atomic bomb, mass surveillance, and the internet more broadly provide a reasonable basis for such a meta-analysis.

I. Quantum, What’s the Difference?

It is entirely reasonable to wonder if there is anything particular or special about quantum AI as opposed to its classical counterpart. What distinguishes QAI from any other emerging technology or innovation? And, if QAI is somehow qualitatively different (by virtue of being extraordinarily different, quantitatively), does this difference warrant independent ethical consideration? To answer these questions, we need to understand a little about how each technology works and then consider how they differ, fundamentally.

Classical Artificial Intelligence

Classical AI has evolved over the past seventy years to constitute an architecture of interconnected and interacting models. These models, at their core, convert information from the real world (be that images, sounds, or words) into numbered objects (often referred to as ‘tokens’) that are given a probability of being logically associated with other objects of a like and unlike kind.

Schematic Representation of an Artificial Intelligence (AI) System. Source.

The most primitive function that drives AI computation is ‘if’ / ‘then.’ Think of chess. If the white rook moves three spaces, then (there are a variety of possible response moves). Over the years, however, the functions and coding that structure AI models have become increasingly complex, with machine learning algorithms taking centre stage. Today, rather than having to program the entire set of possible moves and permutations, the system will assign probabilities to the associations between particular objects based on every segment of new information, and each interaction between itself and a user. This method of ‘training from context’ ultimately creates a situation where user instructions generate outputs based on our statistically preferred associations. Critically, however, this means classical AI doesn’t know why the words “desiccated” and “horoscope” lack meaningful association. Instead, the model was trained that these two words rarely (if ever) appear together in context. Whenever the AI output the sentence “he read his desiccated horoscope each morning,” the researcher told the model ‘no,’ as many times as was required so this result was statistically insignificant. This rules-based, logical, statistical, object-oriented form of modelling also ensures classical AI can’t generate results outside those the model was specifically programmed to produce. As a language model, ChatGPT cannot interpret images; and, until it has been trained on data written in the Sotho language, it is of little use to many people in the Kingdom of Lesotho. In other words, classical AI isn’t really thinking and it doesn’t actually understand what it’s outputting. Instead, classical AI is simulating the cognitive processes computer scientists assume are taking place when human beings reason. Here, therefore, we encounter the fundamental point of departure between classical and quantum AI: assumptions concerning the nature of human cognition.
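The idea of statistical association without understanding can be illustrated with a minimal sketch. The toy corpus, the `association` function, and the co-occurrence measure below are all hypothetical simplifications chosen for illustration; production language models use learned neural representations rather than raw counts, but the underlying point is the same: the score reflects how often words appear together, not what they mean.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy corpus; a real model trains on billions of tokens.
corpus = [
    "he read his daily horoscope each morning",
    "she read her horoscope each morning",
    "the recipe calls for desiccated coconut",
    "add desiccated coconut to the batter",
]

sentence_counts = Counter()  # number of sentences containing each word
pair_counts = Counter()      # number of sentences containing both words

for sentence in corpus:
    words = sorted(set(sentence.split()))
    sentence_counts.update(words)
    # Count every unordered pair of distinct words sharing a sentence.
    for a, b in combinations(words, 2):
        pair_counts[(a, b)] += 1


def association(a: str, b: str) -> float:
    """Fraction of sentences containing `a` that also contain `b`."""
    if sentence_counts[a] == 0:
        return 0.0
    key = tuple(sorted((a, b)))
    return pair_counts[key] / sentence_counts[a]


# The model "knows" only that these words never co-occur in its data.
print(association("desiccated", "coconut"))
print(association("desiccated", "horoscope"))
```

In this sketch, "desiccated" is strongly associated with "coconut" and not at all with "horoscope", yet nothing in the code represents why a horoscope cannot be desiccated.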

Quantum Artificial Intelligence

Quantum AI remains very much in its infancy. Indeed, the most significant steps towards QAI took place almost thirty years ago, when Peter Shor (1994) developed his eponymous algorithm to perform ‘prime factorization’ exponentially faster than the best known classical methods, and Lov Grover (1996) developed an algorithm that finds a single item in an unsorted database of N entries in roughly √N steps, rather than the roughly N steps a conventional search requires. The Shor and Grover algorithms represent the backbone of quantum machine learning. It is only in the last decade or so that new algorithms have joined their ranks (HHL; QSVM; Quantum K-means Clustering; and Quantum Dimensionality Reduction), adding to the speed, complexity, and capacity of quantum computation and, thus, (theoretically) quantum artificial intelligence.
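To give a sense of the square-root speed-up attributed to Grover's algorithm, the following is a minimal classical simulation of its state vector. It is a sketch for intuition only: the function name `grover_search` and the choice of 1,024 items are assumptions made for this example, and simulating the amplitudes on a classical machine does not itself deliver any speed-up.

```python
import math


def grover_search(n_items: int, marked: int):
    """Classically simulate Grover's algorithm on a real-valued state vector.

    Returns (oracle_calls, probability_of_measuring_the_marked_item).
    """
    # Start in the uniform superposition: every item has amplitude 1/sqrt(N).
    amps = [1.0 / math.sqrt(n_items)] * n_items
    # The standard iteration count is about (pi / 4) * sqrt(N).
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]
    return iterations, amps[marked] ** 2


calls, probability = grover_search(n_items=1024, marked=7)
print(f"oracle calls: {calls}, success probability: {probability:.4f}")
print(f"expected classical lookups (about N / 2): {1024 // 2}")
```

With 1,024 items the simulation uses 25 oracle calls and ends with the marked item almost certain to be measured, whereas a classical search would inspect around half the database on average.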

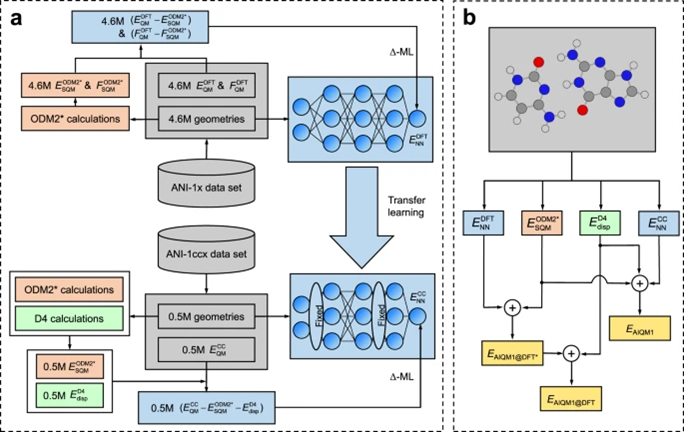

Schematic Representation of a Quantum AI System (a training neural network (NN); b representation of components). Source.

Aside from advances in machine learning derived from quantum information processing, the field of Quantum Decision Theory has proven fertile ground for the theorisation of QAI. According to Quantum Decision Theory, “realistic human decision-making can be interpreted as being characterized by quantum rules. This is not because humans are quantum objects, but because the process of making decisions is dual, including both a rational evaluation of utility as well as subconscious evaluation of attractiveness.” In other words, the limits of classical AI are precisely coextensive with the distance between the classical and the quantum conception of human cognition. Artificial intelligence, if it seeks to move beyond the mere appearance of simulation and mimicry, will need to incorporate a learning framework structured by quantum effects.

So far, however, progress on incorporating meaningful quantum effects such as superposition and entanglement remains largely theoretical. In principle, if classical neural networks could be made quantum, with quantum neurons (entangled and in superposition), quantum AI would have the hardware to complement its algorithms. Indeed, conceptually, the relationship between a classical computing bit and a quantum qubit is very much like the relationship between a classical network neuron and a quantum network neuron. In the final analysis, precisely the same physical challenges that confront quantum computing confront quantum AI (i.e., quantum decoherence due to uncertainty effects and the measurement problem). These difficulties notwithstanding, the parallelism between quantum computing and quantum AI may hold the promise of their respective flourishing.
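The contrast between a classical bit and a qubit can be sketched in a few lines. This is an illustrative model only, assuming a single idealised qubit with real amplitudes and no decoherence; the helper names `hadamard` and `measurement_probabilities` are hypothetical and not drawn from any particular quantum library.

```python
import math

# A classical bit holds exactly one of two values.
bit = 0

# A qubit is described by two amplitudes (alpha, beta) with
# alpha**2 + beta**2 == 1; here it starts in the state |0>.
ZERO = (1.0, 0.0)


def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    alpha, beta = state
    s = 1.0 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def measurement_probabilities(state):
    """Probabilities of reading 0 or 1 when the qubit is measured."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


superposed = hadamard(ZERO)
p0, p1 = measurement_probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")

# Applying the gate again makes the amplitudes interfere, returning the
# qubit to |0>. A classical coin flip has no equivalent of this step.
restored = hadamard(superposed)
print(measurement_probabilities(restored))
```

The second application of the gate is the interesting part: interference between amplitudes is what distinguishes a qubit in superposition from a bit that is merely unknown.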

Entangled Quantum Future

A growing body of research suggests machine learning may be the key to resolving certain intractable problems in quantum computing, in particular the error rates that arise from the physical challenges posed by energy transfer and interference. An algorithm known as Quantum Support Vector Machine (QSVM) is currently being deployed on quantum computing hardware, showing promising results. Simultaneously, it is argued that only quantum computers are capable of running the kinds of algorithms that distinguish quantum AI from its classical counterpart. This scientific situation can be viewed either as a kind of stand-off or as the cracking of a dam. The complementarity between quantum challenges in computing and artificial intelligence either describes a strict theoretical and physical limit or invites a symbiosis that will usher in the technological revolution sought by each field.

II. Quantum Ethics in the Future

Although Quantum Artificial Intelligence Labs are popping up in industry and university settings, there is presently no such thing as ‘quantum AI’ — which means we find ourselves in the fortunate position of being able to ponder ethical considerations in advance. There are three spaces across and within which quantum AI will likely have effects that warrant scrutiny: the international system, the nation-state, and interpersonal interactions.

The International System

With respect to the international system, a useful heuristic is to imagine oneself in the 1940s, knowing everything we now know about nuclear weapons and their proliferation, and consider what kinds of safeguards, practices, or regulations we ought to have put in place then, but didn’t, because we didn’t know the future.

Quantum AI (both its pursuit and realisation) is likely to amplify and accelerate divisions between emerging blocs. Something like ‘quantum nationalism’ is already evident, with Russian, Chinese, and U.S. leaders suggesting that quantum breakthroughs (AI included) are the next frontier in relative power maximization. Not only will the first nation to harness the power of quantum AI have a global military advantage, but the economic dividends could be extraordinary. According to PwC, artificial intelligence may add 15.7 trillion USD to the global economy by 2030. Quantum AI could enable an actor not only to contribute to this sum but also to control its flow.

The ethical reply to this ‘quantum AI arms race’ is similar to that proposed by Niels Bohr when he considered the nuclear arms race of his time. Bohr suggested that the nations of the world, rather than jealously guard their research, ought to enter into a programme of joint scientific cooperation and knowledge sharing. The world will be a much safer place if China, Russia, and the U.S. work together rather than independently on a quantum AI breakthrough. Imagine for a moment, in the context of the prevailing geopolitical mood arising from Russia’s invasion of Ukraine and the one-China principle, if any one of these three nations suddenly cracked the technology. The shift in the balance of power would be dramatic and would likely lead to considerable conflict intensification if quantum AI allowed national interests to be pursued unimpeded.

The Nation-State

At the level of the nation-state, a useful thought experiment is to imagine how the ‘war on terror’ of the early 2000s, or the measures to limit transmission of the COVID-19 virus during the 2020/2021 global pandemic might have been shaped had governments at the time had quantum AI at their disposal. On the one hand, governments equipped with quantum AI would be extremely efficient at revenue assessment, collection, and redistribution.

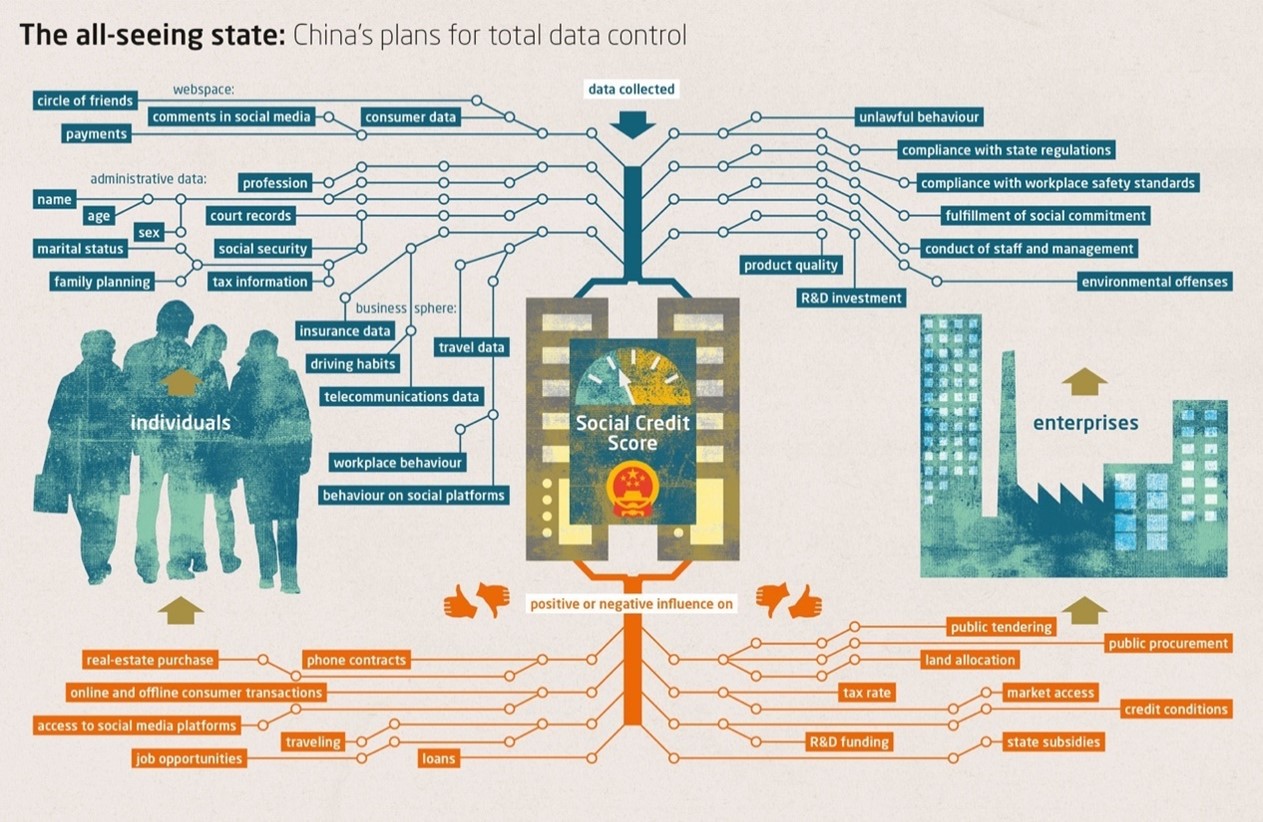

On the other hand, QAI combined with the arbitrary collection of metadata necessitates a complete reconsideration of what is understood by public and private spheres, as well as the concept of privacy itself. In China, AI is already being used to fortify state power and extend repression, with social scoring and facial recognition excluding populations from certain spaces and opportunities. Once granted, sovereign privileges are rarely returned by governments. And there is no guarantee that the next administration will be as benign as the last.

Representation of China’s Social Scoring Programme. Source.

The ethical response to the prospect of unparalleled state surveillance, intrusion, and control — i.e., the very nature of state power itself — is to establish strict and appropriate legal limits concerning governmental use of QAI. Additionally, non-partisan bodies and an ombudsperson might be instituted to monitor and report on governmental use of such technology. The key consideration is full disclosure and transparency. The state must make public its intentions concerning QAI, and seek some manner of democratic approval prior to deployment. The public must be prepared to scrutinise probable future circumstances where national security is used as the pretext for expansive new QAI powers.

Interpersonal Interaction

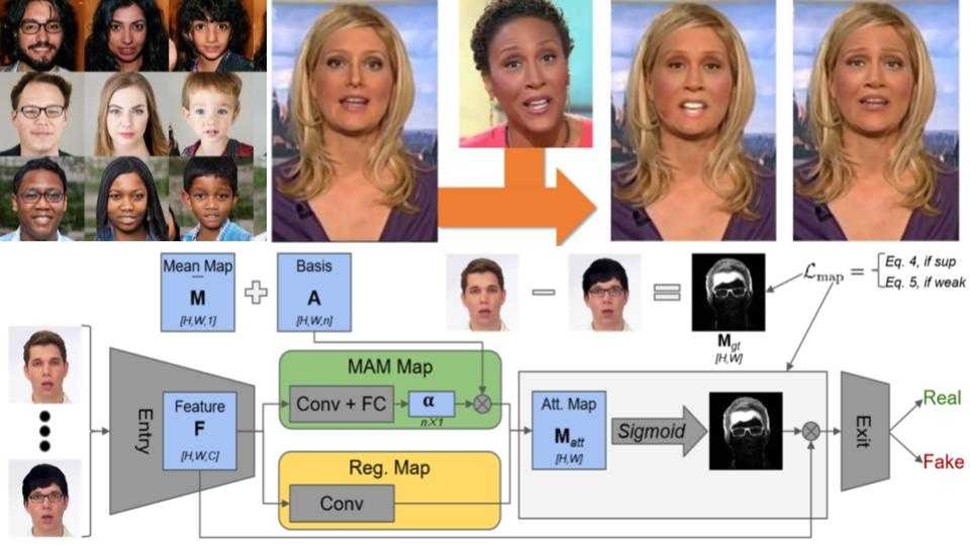

At the level of interpersonal interaction, it is helpful to consider how elections, referendums, and other democratic processes might be affected in the context of quantum AI, given what we now understand of the interaction between algorithmic interference and social media during the Brexit referendum and U.S. Presidential election of 2016. Furthermore, if contemporary phishing and email scams are a problem, imagine how much more persuasive and effective digital crime becomes in the age of QAI. Revenge porn, deep fakes, and other forms of digital identity exploitation become issues of unfathomable social concern in the context of QAI. In the not-too-distant future it will be virtually impossible to distinguish (using our natural senses) between an AI-generated video and a video of the same person shot IRL (in real life). Imagine receiving a video on WhatsApp from one of your parents asking you to send them money. With access to your social media account, QAI would be able to identify your mother, generate a video of her with perfect simulated audio, spoof her bank account (or Bitcoin wallet), and deceive you into parting with your funds.

Schematic Representation of Deepfake Process. Source.

The ethical solution to the myriad interpersonal issues that arise in the context of quantum AI is similar to that being deployed concerning the use of ChatGPT in schools and universities. Parallel to the development of QAI, we must develop detection and screening tools that enable individuals to quickly and effectively identify anything that is the product of, or has been generated with the assistance of, QAI. Regulators could then mandate that every personal and institutional device be installed with QAI detection software (similar to virus detection) that instantly flags and marks all QAI content.

III. Quantum Ethical Conclusions

As with every significant technological breakthrough, quantum artificial intelligence is a double-edged sword. For every medical miracle, a million workers might be displaced. In exchange for frictionless tax collection, we may lose any notion of privacy. If the West achieves quantum AI supremacy, democratic ideals may live another day (although there are no guarantees). On the other hand, if China secures that winner-takes-all prize, the global order might be in for a rude awakening. Our primary saving grace, however, is that quantum AI doesn’t exist (yet). This means we are in the unique position of being able to fortify ourselves in the present against the most important future ethical considerations. In the end, the ethical prescriptions to ameliorate and temper QAI’s most malign trajectories are profoundly proper deontological principles: cooperation, transparency, protection, and accountability. If the development of QAI were undertaken on the basis of international cooperation, we might avoid the worst aspects of quantum nationalism. If governments committed to deploying QAI under appropriate democratic conditions, the prospect of state repression would be significantly reduced. Finally, if QAI were regulated in the public interest, with a safety-first orientation, its use for nefarious purposes would be greatly curtailed. The final ‘white pill’ for those concerned about a QAI future is that we have to deal with its little sister, classical AI, first. In other words, society, states, and the international system get forewarning of what will come and, therefore, need less convincing about the complementary dangers and promises than if QAI sprang from some laboratory ex nihilo.

Case Study: The Quantum Governance Stack

Elija Perrier

Project Q – Centre for International Security Studies

University of Sydney

2023

I. Stakeholder Principles

The quantum governance stack is an actor-instrument model for quantum governance across a governance hierarchy from states and governments through to public and private institutions. While technology governance is a vast discipline, owing to the nascence of quantum information technologies (QIT) and their developmental trajectory, the development of quantum-specific governance frameworks has hitherto been limited. The governance of QIT comprises heterogeneous objectives, such as the development of QIT themselves and typical considerations that apply to any technology, along with quantum-specific risks (such as those relating to decryption). The multi-objective nature of quantum governance, and the fact that not all objectives are necessarily consistent with each other, means that any governance framework covering quantum technology must provide a means of considering and reconciling trade-offs between competing objectives. Our focus is analysing how existing governance frameworks provide models which may be adapted, reproduced or modified to provide best-practice responses to QIT. To this end, our paper contributes the following: (i) situating the governance of quantum systems within existing technology governance instruments, such as international treaties, legislative regimes and regulation; (ii) a comparative analysis of the governance of QIT by comparison with similar information and engineering technologies; (iii) a survey of different quantum stakeholders, their objectives and their imperatives; and (iv) providing practical guidance for quantum stakeholders.

Even before considering the form of governance for QIT, we must address two threshold questions: (1) whether, despite uncertainty around QIT’s technological development, discussion of QIT governance is well-motivated; and (2) whether QIT requires different, new or novel governance that does not already exist within the technology governance landscape. We answer both of these questions in the affirmative. Firstly, quantum governance is, we argue, well-motivated owing to the potentially far-reaching consequences of QIT (such as on encryption systems and its effect on computing power, making problems that are currently practically or theoretically intractable potentially solvable). Secondly, we argue that quantum governance is sui generis, mandating its own specific instruments (such as policies, treaties, risk assessments etc.) due to (i) the need to prescribe and potentially proscribe uses of QIT beyond merely specifying non-quantum specific principles to do no harm, for example; and (ii) because quantum-specific governance instruments and research will enable such instruments to align with QIT ecosystems as they develop.

We organise the governance stack around different governance instruments for managing multiple stakeholder rights, interests, duties and obligations. Our method represents an instrumentalist approach to governance, concentrating upon the types of instruments, such as treaties, legislation, protocols, policies and procedures, which can be used to regulate (formally and informally) relations among stakeholders, including by providing means by which rights and obligations are negotiated and disputes or differences resolved.

II. Quantum Governance Stakeholders

Our approach adopts the following taxonomy: (a) states (governments) as the primary agents of regulation, and their role in (i) international and (ii) national formal (legislative) regulation; (b) multilateral institution(s); (c) national instrumentalities, such as parliaments and administrative agencies; (d) industrial and commercial stakeholders; (e) universities and academia; (f) individual producers/consumers of QIT and (g) civil society and technical community groups. By quantum, we mean quantum information technologies, principally quantum computing, communication and quantum sensing that are fundamentally characterised by their informational processing properties; by governance, we mean both ‘hard’ formal enforceable rules and ‘soft’ power or influence driven by particular objectives or outcomes; by models, we mean an idealised methodology and structure for what different parts of the governance stack would ideally resemble. A diagram of stakeholders and governing instruments is below.

III. Quantum Governance Process

The quantum governance stack model involves (1) identifying categories of ‘quantum stakeholders’; (2) engaging with stakeholders around QIT; (3) identifying the interests (objectives), rights and duties of such stakeholders; (4) identifying how QIT affects such stakeholder rights and what stakeholder duties are with respect to QIT; (5) undertaking risk management assessments of such impacts; (6) identifying existing or newly required governance instruments appropriate or relevant to managing, mitigating and controlling such risks; (7) drafting or building such instruments in a consultative manner; (8) reviewing such proposed instruments for consistency, feasibility and fitness for purpose; and (9) finalising such governance architecture along with undertaking its implementation (involving monitoring, reporting, auditing).
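For readers who think in terms of workflows, the nine steps above can be sketched as an ordered pipeline. This is a hypothetical illustration rather than part of the governance stack model itself: the `Step` names are paraphrases of the steps listed, the `GovernanceProcess` class is invented for this example, and the strict ordering it enforces is an assumption, since in practice stakeholders may well revisit earlier steps.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Step(IntEnum):
    """Paraphrased labels for the nine steps of the process."""
    IDENTIFY_STAKEHOLDERS = 1
    ENGAGE_STAKEHOLDERS = 2
    IDENTIFY_INTERESTS_RIGHTS_DUTIES = 3
    ASSESS_QIT_EFFECTS = 4
    RISK_ASSESSMENT = 5
    IDENTIFY_INSTRUMENTS = 6
    DRAFT_INSTRUMENTS = 7
    REVIEW_INSTRUMENTS = 8
    FINALISE_AND_IMPLEMENT = 9


@dataclass
class GovernanceProcess:
    """Tracks one stakeholder's progress through the steps, in order."""
    stakeholder: str
    completed: list = field(default_factory=list)

    def next_step(self):
        done = len(self.completed)
        return Step(done + 1) if done < len(Step) else None

    def complete(self, step: Step, note: str = "") -> None:
        # Assumption: steps are completed strictly in sequence.
        if step != self.next_step():
            raise ValueError(f"{step.name} attempted before {self.next_step()}")
        self.completed.append((step, note))


process = GovernanceProcess(stakeholder="hypothetical quantum startup")
process.complete(Step.IDENTIFY_STAKEHOLDERS, "customers, regulators, staff")
process.complete(Step.ENGAGE_STAKEHOLDERS, "workshops held")
print(process.next_step().name)
```

Recording a note against each completed step gives a simple audit trail, which loosely mirrors the monitoring and reporting mentioned in step (9).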

Such QIT governance procedures are for use by multiple stakeholders (not just governments) and reflect an adaptation of typical deliberative and inclusive governance. A diagram of this approach is set out below.

Quantum Governance Instruments

Quantum governance concerns relations among stakeholders, which are mediated via specific governance instruments. These include: (i) public international law or multilateral instruments, which mediate inter-state relations; and (ii) government instruments, including legislation, executive instruments or policy. Within states, various institutions may contribute to the governance stack, including (iii) technical communities, (iv) commercial private sector organisations, (v) public institutions, (vi) individuals and (vii) civil society groups. A table of specific instruments for each sector is set out below.

IV. Quantum Governance & Risk Management: Corporations

One application of the quantum governance stack is its use in corporate and commercial institutions. Commercial private sector actors are increasingly important in the QIT ecosystem globally. By private sector, we primarily mean corporations, including ‘quantum startups’ such as PsiQuantum, Xanadu and Rigetti, but also established companies such as Google, IBM, Amazon and Honeywell, which are undertaking considerable investment, research and development in the quantum sector. The quantum governance stack approach for such stakeholders involves once more accounting for the overall objectives of such stakeholders, such as maximising profit, growing a business, building scalable or useful products, along with the rights and interests of such stakeholders and those affected by their actions.

The governance of corporations, businesses and companies is mandated by municipal business law, such as corporations law statutes and regulations. Corporations, for example, are accorded their own distinct legal personhood but must act through the delegated authority of agents (such as officers, contractors or employees) who are bound by typical duties such as the duty to act in the best interests of the company, or other fiduciary-style duties. Company governance is also manifest via company policies that set out practices, routines and procedures. Such policies in turn usually encode or specify how risk is managed within the organisation. Thus, the governance of QIT-related activities of corporations would occur (a) externally, via the imposition of regulation and (b) internally, via the formulation of internal policies, which must take into account such fundamental business objectives. Most companies involved in the quantum sector have business objectives aligning with the development of (i) quantum hardware, (ii) quantum software or (iii) infrastructure related to QIT, such as that related to quantum sensing or communication. Thus, their objectives and interests align broadly with the development objectives common across other quantum stakeholders. Companies are also necessarily interested in security.

From an idealised quantum governance stack perspective, the answer to how firms should manage quantum-specific risks is to be found in existing firm-level risk management protocols. These set out firms’ risk policies and include relevant intra-institutional delegation of responsibilities for management, oversight and control of risks (such as to compliance functions or officers). Moreover, specific policy frameworks that instil auditing, checks and review against governance outcomes both (a) in technical product design/development stages and (b) when products are being approved for release, can be leveraged or adapted to handle quantum-specific risks. An abstraction of firm risk management for quantum is set out below.